
How organizations should use AI, and what policies that use requires, has been a popular topic of discussion. Two questions stand out: how to align AI governance policies with organizational objectives, and how to balance the need for strict regulation with innovation in AI initiatives.

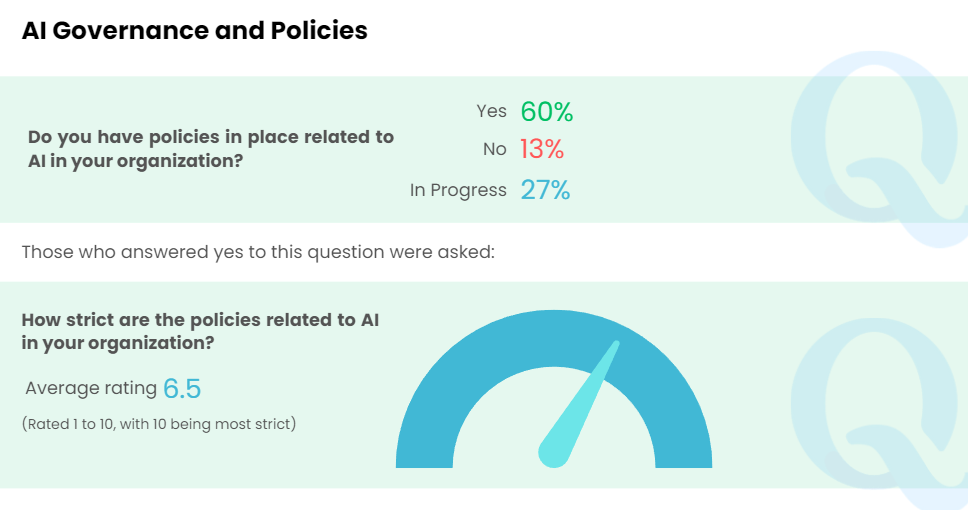

As part of the Cyber Security Tribe annual state of the industry survey, over 250 cybersecurity professionals were asked whether their organization had AI-related policies in place and, if so, to rate how strict those policies are on a scale of 1 to 10 (10 being the strictest).

This article is an extract from the wider 2024 State of the Cyber Security Industry Report, which is available to download and serves as a benchmarking tool for reviewing your priorities and needs for the remainder of 2024 and into 2025.

Aligning AI Governance Policies with Organizational Objectives

Many companies seeking to adopt AI in their operations have started machine learning and AI experiments across their business. Before launching more pilots or testing more solutions, it is useful to step back and take a holistic view: create a prioritized portfolio of initiatives across the enterprise that covers AI as well as the wider analytics and digital techniques available.

Policy makers will need to strike a balance between supporting the development of AI technologies and managing any risks from bad actors. They have an interest in supporting broad adoption, since AI can lead to higher labor productivity, economic growth, and societal prosperity.

Opening public-sector data can spur private-sector innovation. Setting common data standards can also help. AI is raising new questions for policy makers to grapple with, for which historical tools and frameworks may not be adequate. Therefore, some policy innovations will likely be needed to cope with these rapidly evolving technologies. But given the scale of the beneficial impact on business, the economy, and society, the goal should not be to constrain the adoption and application of AI but rather to encourage its beneficial and safe use.

Balancing the Need for Strict Regulation with Innovation in AI Initiatives

Organizations can ensure the responsible building and application of AI by taking care to confirm that AI outputs are fair, that new levels of personalization do not translate into discrimination, that data acquisition and use do not come at the expense of consumer privacy, and that system performance is balanced with transparency into how AI systems make their predictions.

While stricter regulations may increase costs associated with developing, testing, and monitoring AI systems, well-crafted policies will push the security industry toward more responsible AI practices that consider ethics and potential harm.