As SOCs evolve toward agentic automation, their structure, workflows, and professional roles will change substantially. The transition is not a replacement of human expertise but a transformation of where and how it is applied. The AI-augmented SOC represents a new balance between human insight, intelligent automation, and adaptive decision-making.

This article is an extract from the report "Automating and Modernizing SOC with Agentic AI", which is available to download.

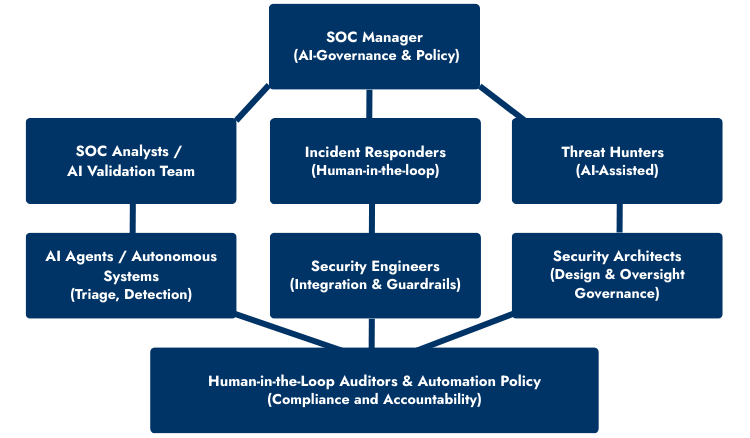

Agentic AI SOC Organizational Structure

In the traditional model, SOC teams are organized around a linear escalation hierarchy: Tier 1 handles triage, Tier 2 conducts deeper investigation, and Tier 3 performs advanced threat analysis. The Agentic AI SOC replaces much of this tiered model with a collaborative network of AI-driven and human-supervised functions.

Automation agents handle continuous telemetry ingestion, triage, and enrichment. Human operators validate critical decisions, refine models, and manage complex investigations. Oversight is provided by governance roles responsible for ensuring transparency, accountability, and ethical operation.

This new architecture is not strictly hierarchical. It functions as an adaptive ecosystem where both humans and AI systems operate in loops of feedback and validation.

Evolution of SOC Roles

Each existing SOC role will change as AI capabilities expand:

SOC Manager - The SOC Manager’s role will become increasingly strategic. Instead of coordinating manual workflows, the focus will shift to supervising AI-human collaboration, setting automation policies, and defining acceptable risk thresholds. The manager will ensure alignment between automated actions and business priorities, while also overseeing governance, compliance, and resource allocation.

SOC Analysts - Traditional Tier 1 analysts will see their repetitive triage duties automated. Their responsibilities will shift to validating AI outputs, refining detection logic, and interpreting patterns surfaced by agents. These professionals will become “AI Validation Analysts,” ensuring that automated triage aligns with real-world context.

Incident Responder - Incident Responders will work closely with AI systems capable of executing initial containment or remediation steps. Their role will shift toward supervising autonomous response playbooks, verifying outcomes, and coordinating communication across affected business units. Human responders remain essential for high-impact incidents where AI actions require confirmation or contextual interpretation.

Threat Hunter - Threat Hunters will use AI as a force multiplier, employing agents that continuously search for anomalies and emerging tactics across datasets. The human role shifts toward hypothesis creation, validation, and adversarial reasoning, areas where creativity and intuition remain irreplaceable.

Security Architect - Architects will take on a governance function, designing end-to-end frameworks that balance automation, human oversight, and compliance requirements. Their responsibility extends to defining secure design patterns for agent collaboration and ensuring that SOC architecture remains explainable, scalable, and defensible during audits.

Security Engineer - Engineers will focus on integrating AI systems within the broader security stack, ensuring interoperability between tools, telemetry pipelines, and automation layers. They will design guardrails, configure agent permissions, and maintain the integrity of underlying infrastructure supporting AI decision-making.

New Roles in the Agentic SOC

As AI-driven functions mature, new positions will emerge to sustain operational confidence:

- AI Validation Analyst – Reviews, verifies, and approves decisions made by AI agents. Ensures that autonomous triage aligns with contextual intelligence and organizational policy.

- Human-in-the-Loop Auditor – Monitors AI-driven workflows, maintains audit trails, and provides independent oversight to ensure transparency and accountability.

- AI Systems Engineer – Maintains the operational health of agentic frameworks and oversees model retraining and telemetry integration.

- Automation Policy Lead – Defines thresholds for autonomous actions and manages escalation protocols between AI and human operators.

- AI Behavior Auditor – Audits agent behavior, tests controls, and validates that AI actions align with the SOC team's AI charter.

- AI Chief Compliance Officer – Establishes and monitors policy covering the applicable laws and regulatory frameworks that govern system-to-system AI use.

These roles ensure that the SOC remains human-led while benefiting from the speed and precision of automation.
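To make the Automation Policy Lead's work more concrete, the Python sketch below shows one way autonomy thresholds and escalation rules might be encoded. Everything in it is hypothetical: the `AutonomyPolicy`, `ProposedAction`, and `Decision` names, the 0.9 and 0.6 confidence cut-offs, the 1-5 asset-criticality scale, and the action names are illustrative assumptions, not values taken from the report or from any product.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"          # agent acts without waiting for a human
    REQUIRE_APPROVAL = "require_approval"  # an AI Validation Analyst must approve first
    ESCALATE = "escalate"                  # hand the case to a human responder


@dataclass(frozen=True)
class ProposedAction:
    name: str               # hypothetical action name, e.g. "isolate_host"
    confidence: float       # agent's confidence score in [0.0, 1.0]
    asset_criticality: int  # hypothetical scale: 1 (low) to 5 (business-critical)


@dataclass(frozen=True)
class AutonomyPolicy:
    # All thresholds below are illustrative assumptions, not recommended values.
    min_auto_confidence: float = 0.9    # at or above this, low-risk actions may run autonomously
    min_review_confidence: float = 0.6  # below this, the case is escalated to a human
    max_auto_criticality: int = 2       # assets above this level always need human approval
    always_review: frozenset = frozenset({"disable_account"})  # actions never run autonomously

    def decide(self, action: ProposedAction) -> Decision:
        # Low confidence: a human takes over instead of reviewing a proposal.
        if action.confidence < self.min_review_confidence:
            return Decision.ESCALATE
        # Sensitive actions or critical assets always require sign-off.
        if action.name in self.always_review or action.asset_criticality > self.max_auto_criticality:
            return Decision.REQUIRE_APPROVAL
        # Confident action on a low-criticality asset: allowed to run autonomously.
        if action.confidence >= self.min_auto_confidence:
            return Decision.AUTO_EXECUTE
        # Middle confidence band: the proposal is queued for validation.
        return Decision.REQUIRE_APPROVAL


policy = AutonomyPolicy()
print(policy.decide(ProposedAction("quarantine_file", 0.95, 1)))  # Decision.AUTO_EXECUTE
print(policy.decide(ProposedAction("isolate_host", 0.97, 4)))     # Decision.REQUIRE_APPROVAL
print(policy.decide(ProposedAction("block_ip", 0.40, 1)))         # Decision.ESCALATE
```

Keeping the policy as a small, declarative object is a deliberate choice in this sketch: thresholds can be reviewed, versioned, and audited like any other configuration, which gives the Human-in-the-Loop Auditor a single artifact to inspect.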

Mitchem Boles, Field CISO for Intezer, states: "Foundational transformation of an AI-Adapted SOC allows human experts to propagate diverse skills through expanded roles to drive real and meaningful progress in securing an organization proactively."

Below is a potential AI-Augmented SOC Structure where AI systems handle scale and speed, while humans maintain judgment, governance, and accountability.

A potential AI-Augmented SOC Structure

Building Trust in Agentic AI SOC

The successful adoption of Agentic AI in the SOC depends on sustained trust between humans and automated systems. That trust rests on transparency, accountability, and demonstrable performance.

To achieve this, organizations should implement:

- Explainable AI mechanisms that make decision logic visible to analysts and auditors.

- Immutable audit logs recording all AI-driven actions and their justifications.

- Governance frameworks that define when and how AI systems can act autonomously.

- Training programs ensuring SOC staff understand both the capabilities and limits of the technology.
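A common way to make an audit log tamper-evident is hash chaining: each entry stores the SHA-256 hash of the previous entry, so altering a past record without rewriting every later entry becomes detectable. The Python sketch below assumes that approach; the `AuditLog` class and its field names are hypothetical. A hash chain makes edits detectable rather than impossible, so in practice the latest hash would also be anchored in storage the agents cannot write to, such as a WORM archive.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained log of AI-driven actions (tamper-evident, not tamper-proof)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def append(self, agent: str, action: str, justification: str) -> dict:
        # Link the new entry to the hash of the most recent one.
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "justification": justification,  # the agent's stated reason, kept for auditors
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self._entries.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        body = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def verify(self) -> bool:
        # Recompute every hash and check each link back to the genesis value.
        prev_hash = self.GENESIS
        for record in self._entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("triage-agent", "close_alert", "Matched a known-benign software update")
log.append("response-agent", "quarantine_file", "File hash matched a threat intel indicator")
print(log.verify())  # True
log._entries[0]["justification"] = "edited after the fact"  # simulate tampering
print(log.verify())  # False
```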

Over time, trust is reinforced through consistent, verifiable outcomes that prove the value of automation without eroding human control.
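One way to make consistent, verifiable outcomes measurable is to track how often AI Validation Analysts confirm the agent's triage verdicts. The Python sketch below assumes each validated alert is stored with the AI verdict and the analyst's final verdict; the `ValidatedVerdict` record, the `agreement_by_verdict` function, and the `benign`/`malicious` labels are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatedVerdict:
    alert_id: str
    ai_verdict: str       # verdict proposed by the triage agent (hypothetical labels)
    analyst_verdict: str  # final verdict recorded by the AI Validation Analyst


def agreement_by_verdict(records: list[ValidatedVerdict]) -> dict[str, float]:
    """Share of AI verdicts that analysts confirmed, grouped by the AI's verdict."""
    totals: Counter[str] = Counter()
    confirmed: Counter[str] = Counter()
    for r in records:
        totals[r.ai_verdict] += 1
        if r.ai_verdict == r.analyst_verdict:
            confirmed[r.ai_verdict] += 1
    return {verdict: confirmed[verdict] / n for verdict, n in totals.items()}


records = [
    ValidatedVerdict("a1", "benign", "benign"),
    ValidatedVerdict("a2", "benign", "malicious"),  # analyst overturned the agent
    ValidatedVerdict("a3", "malicious", "malicious"),
    ValidatedVerdict("a4", "benign", "benign"),
]
print(agreement_by_verdict(records))  # {'benign': 0.6666666666666666, 'malicious': 1.0}
```

A falling confirmation rate for `benign` verdicts deserves the most attention, because each disagreement there is an alert the agent could have closed on its own.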
